Can ChatGPT Pass the Supply Chain Test? Blue Yonder Reveals Benchmark Study for LLMs

There’s been a lot of talk about how generative AI will change the way people work in the supply chain, and we wanted to put that claim to the test.

In our experiment, we explore how capable Large Language Models (LLMs) are out of the box and whether they can be applied effectively to supply chain analysis to address the real issues faced in supply chain management.

LLMs, such as the models behind ChatGPT, are a type of artificial intelligence trained on massive amounts of data, which allows them to learn the patterns, grammar, and semantics of language. Over the past few years, LLMs have exploded in growth and are now used in a range of applications worldwide, including content creation, customer service, and market research.

IDC data reveals that Software and Information Services, Banking, and Retail industries are projected to allocate approximately $89.6 billion towards AI in 2024, with generative AI accounting for more than 19% of the total investment.

This rapidly evolving technology offers businesses increased creativity, efficiency, and decision-making capabilities – which have the power to revolutionize industries and processes.

So how do LLMs currently handle supply chain situations with no context?

The Generative AI Supply Chain Benchmark

Our generative AI supply chain test is based loosely on the viral ChatGPT experiment in which the model passed the bar exam with a combined score of 297, approaching the 90th percentile of all test takers. A score near the top 10% suggests that LLMs can comprehend and apply legal principles and regulations.

This groundbreaking achievement sparked global conversation and highlighted the transformative potential of AI. We decided to take this conversation further by finding out how leading LLMs would fare on supply chain exams. We had them take two standardized tests, the CPSM and the CSCP, to see if they too could act as supply chain professionals, understanding the niche rules and context of the supply chain industry with no supply-chain-specific training.

We designed the experiment to programmatically run each LLM through the practice tests, with no context around the test, no access to the internet and no coding ability. This was to test how the LLMs would perform straight out of the box, providing a consistent and unbiased evaluation.

Both the CPSM and the CSCP are multiple-choice tests. Rather than have the LLMs simply select an answer, we set up an output for the models to explain each choice they selected. This approach allowed us to gain valuable insight into the model’s reasoning process and understand why it was getting answers wrong or right, helping us evaluate each model’s abilities.
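A test loop of the kind described above might look roughly like the following. This is a minimal sketch, not the harness used in the study: the prompt wording, the question format, and the `ask_model` callable (a stand-in for whichever LLM API is being tested) are all assumptions made for illustration.

```python
import re
from typing import Callable, Optional

# Hypothetical prompt wording; the study's actual prompt is not published here.
PROMPT_TEMPLATE = (
    "Answer the multiple-choice question below.\n"
    "Reply with 'Answer: <letter>' on the first line, "
    "then 'Explanation: <your reasoning>'.\n\n"
    "{question}\n{options}"
)


def build_prompt(question: str, options: dict) -> str:
    """Format one question and its lettered options into a prompt."""
    option_lines = "\n".join(
        f"{letter}. {text}" for letter, text in sorted(options.items())
    )
    return PROMPT_TEMPLATE.format(question=question, options=option_lines)


def parse_reply(reply: str) -> tuple:
    """Extract the chosen letter (or None) and the explanation text."""
    answer = re.search(r"Answer:\s*([A-D])\b", reply, re.IGNORECASE)
    explanation = re.search(
        r"Explanation:\s*(.+)", reply, re.IGNORECASE | re.DOTALL
    )
    letter: Optional[str] = answer.group(1).upper() if answer else None
    text = explanation.group(1).strip() if explanation else ""
    return letter, text


def run_benchmark(questions: list, ask_model: Callable[[str], str]) -> dict:
    """Ask every question once and record the answer, correctness, and reasoning."""
    records = []
    for q in questions:
        reply = ask_model(build_prompt(q["question"], q["options"]))
        letter, explanation = parse_reply(reply)
        records.append(
            {
                "id": q["id"],
                "chosen": letter,
                "correct": letter == q["answer"],
                "explanation": explanation,
            }
        )
    accuracy = sum(r["correct"] for r in records) / len(records) if records else 0.0
    return {"accuracy": accuracy, "records": records}
```

Keeping the explanation alongside each answer is what makes it possible to review afterwards why a model got a particular question right or wrong.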

After updated versions of the LLMs were released, we ran the test again this summer to collect new benchmark results.

Can LLMs pass supply chain exams?

The LLMs performed surprisingly well on the supply chain exams without any additional training.

While most models achieved a solid passing grade, Claude 3.5 Sonnet stood out, securing an impressive 79.71% accuracy on the CPSM certification. On the CSCP exam, OpenAI’s o1-Preview and GPT 4o models edged out Claude Opus, scoring 48.30% accuracy compared to the latter’s 45.7%.

While LLMs performed well in certain areas, they also showed limitations, particularly when faced with mathematics-related questions or deeply domain-specific questions.

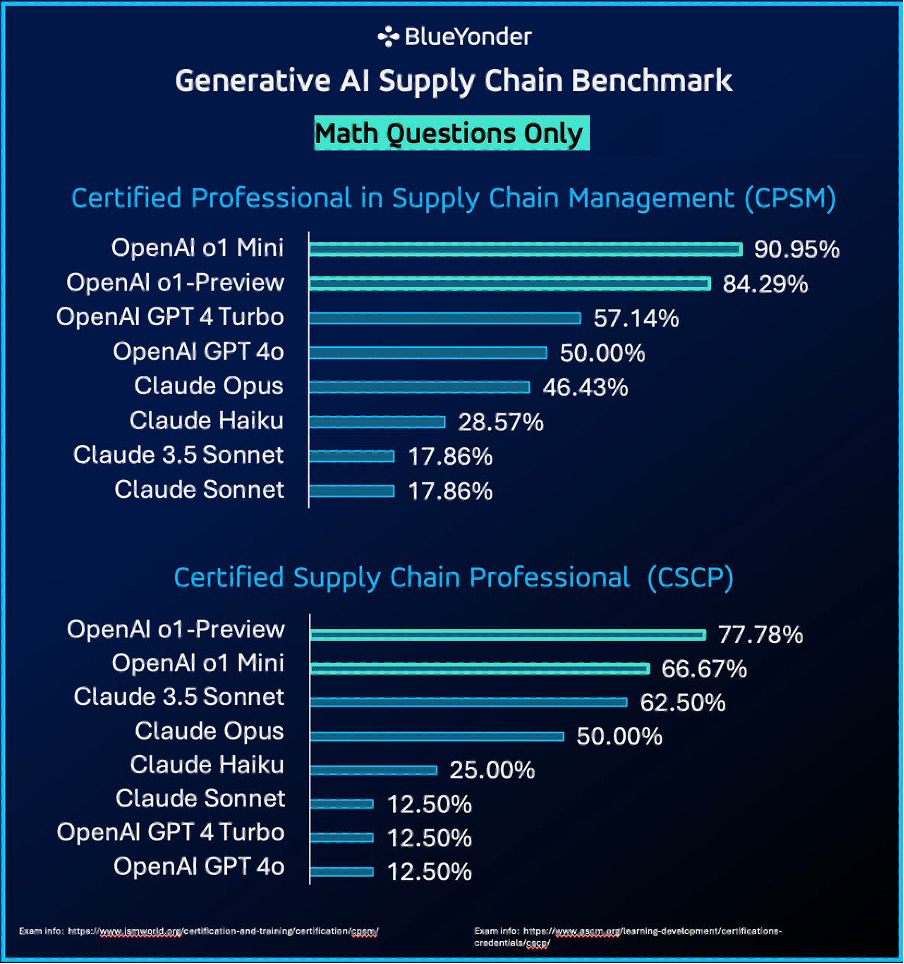

When examining only the math problems in each certification exam, OpenAI o1 Mini showcased a significant improvement in accuracy for OpenAI models, outperforming the Claude models tested.

These results were generated with no context, so let’s explore what happens when we start giving the LLMs more to work with.

Stage 2: Adding internet access

In the next stage of testing, we gave the LLMs access to the internet, allowing them to search using you.com. With that added capability, OpenAI GPT 4 Turbo showed the largest improvement, rising from 42.38% to 48.34% on the CSCP test, a gain of about six percentage points.

Looking only at the questions missed in the first no-context test, the Claude Sonnet model answered approximately 53.84% of the CPSM questions and 20% of the CSCP questions correctly.
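The accuracy on previously missed questions can be computed by restricting the second run to the questions the first run got wrong. The sketch below assumes each run is stored as a mapping from question ID to a correct/incorrect flag. The function name and the sample values are illustrative only and are not data from the study.

```python
def recovery_rate(baseline: dict, with_tool: dict) -> float:
    """Share of questions missed in the baseline run that the second run answered correctly."""
    missed = [qid for qid, ok in baseline.items() if not ok]
    if not missed:
        return 0.0
    recovered = sum(1 for qid in missed if with_tool.get(qid, False))
    return recovered / len(missed)


# Illustrative values only: five questions missed in the baseline, two recovered.
baseline_run = {1: True, 2: False, 3: False, 4: False, 5: False, 6: False}
internet_run = {1: True, 2: True, 3: False, 4: True, 5: False, 6: False}
rate = recovery_rate(baseline_run, internet_run)
```

Because the denominator is only the missed questions, this figure is not directly comparable to overall test accuracy.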

While this new access allowed the models to search the internet independently, it also introduced the potential for inaccuracies due to unreliable information sources.

Stage 3: Providing context with RAG

For the next test, we used Retrieval-Augmented Generation (RAG), providing the LLMs with study materials for the two exams.

Using RAG, the LLMs outperformed both the no-context and open internet access tests on non-mathematical questions, achieving the highest accuracy scores for both the CPSM and CSCP tests.
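The general idea behind RAG can be sketched in a few lines: retrieve the study-material passages most similar to the question, then place them in the prompt ahead of the question. The example below is a deliberately simple illustration that uses word-count cosine similarity from the standard library. The study's actual retrieval setup is not described here, and production systems typically use embedding models and a vector index instead.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(question: str, passages: list, k: int = 2) -> list:
    """Return the k passages most similar to the question."""
    q_vec = Counter(tokenize(question))
    ranked = sorted(
        passages,
        key=lambda p: cosine(q_vec, Counter(tokenize(p))),
        reverse=True,
    )
    return ranked[:k]


def build_rag_prompt(question: str, passages: list, k: int = 2) -> str:
    """Prepend the retrieved passages to the question as context."""
    context = "\n\n".join(retrieve(question, passages, k))
    return (
        "Use the study material below to answer the question.\n\n"
        f"Study material:\n{context}\n\n"
        f"Question: {question}"
    )
```

The resulting prompt is then sent to the model in place of the bare question, so the model answers with the relevant study material in front of it.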

Stage 4: Coding abilities

Finally, we gave the models the ability to write and run their own code using the Code Interpreter and Open Interpreter frameworks.

Using these frameworks, the LLMs could write code to help solve the mathematical questions in the exams, which they struggled with in the first iteration of the test. With this test, the LLMs outperformed the no-context test by an average of approximately 28% in accuracy across all models for mathematical questions.
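To illustrate why code execution helps, consider the kind of calculation a model might hand off to code rather than attempt in free text. The economic order quantity (EOQ) formula, sqrt(2DS / H), is used here purely as a representative supply chain calculation. The question and its numbers are hypothetical and are not taken from either exam.

```python
import math


def economic_order_quantity(
    annual_demand: float, order_cost: float, holding_cost_per_unit: float
) -> float:
    """Classic EOQ: sqrt(2 * D * S / H)."""
    if annual_demand < 0 or order_cost < 0 or holding_cost_per_unit <= 0:
        raise ValueError("demand and order cost must be >= 0, holding cost > 0")
    return math.sqrt(2 * annual_demand * order_cost / holding_cost_per_unit)


# Hypothetical question: annual demand of 10,000 units, $50 per order,
# $4 per unit per year to hold inventory.
eoq = economic_order_quantity(10_000, 50, 4)  # 500.0 units per order
```

Running the arithmetic in an interpreter removes the step where a language model would otherwise have to compute a square root token by token.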

Can LLMs be used for supply chain problems with no context?

On the whole, the LLMs passed the supply chain exams. This performance presents a very exciting possibility for integrating LLMs into supply chain management. However, the models aren’t perfect yet: they struggled with math problems and specific supply chain logic.

Once given the ability to write code, the models overcame many of the math problems, but they still needed very specific supply chain context to solve some of the more complex questions within the exam.

Luckily, that’s where we excel at Blue Yonder. We’re committed to harnessing the power of generative AI to create practical, innovative solutions for supply chain challenges. Our newly launched AI Innovation Studio is a hub for developing these solutions, bridging the gap between complex AI technologies and real-world applications.

Our focus is on creating intelligent agents tailored to specific roles within the supply chain, ensuring that these agents are equipped to solve the real problems supply chains face right now.

Find out more about our agents with Wade’s ICON talk, as well as the initial stages of this test and how businesses can take advantage of Generative AI to unlock productivity in supply chains.